Firmament

2024 | Visualization Research Centre, HKBU

Project Overview

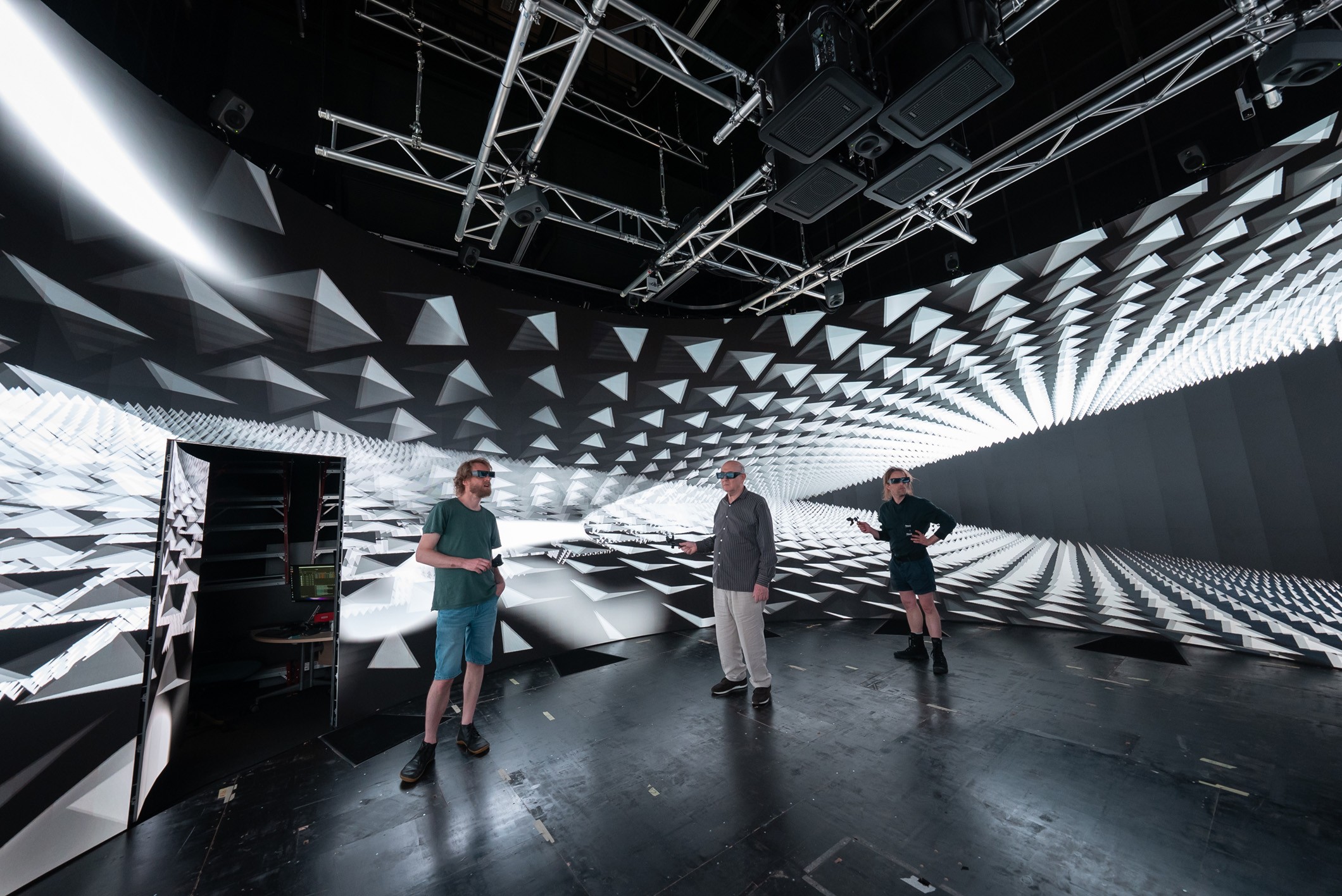

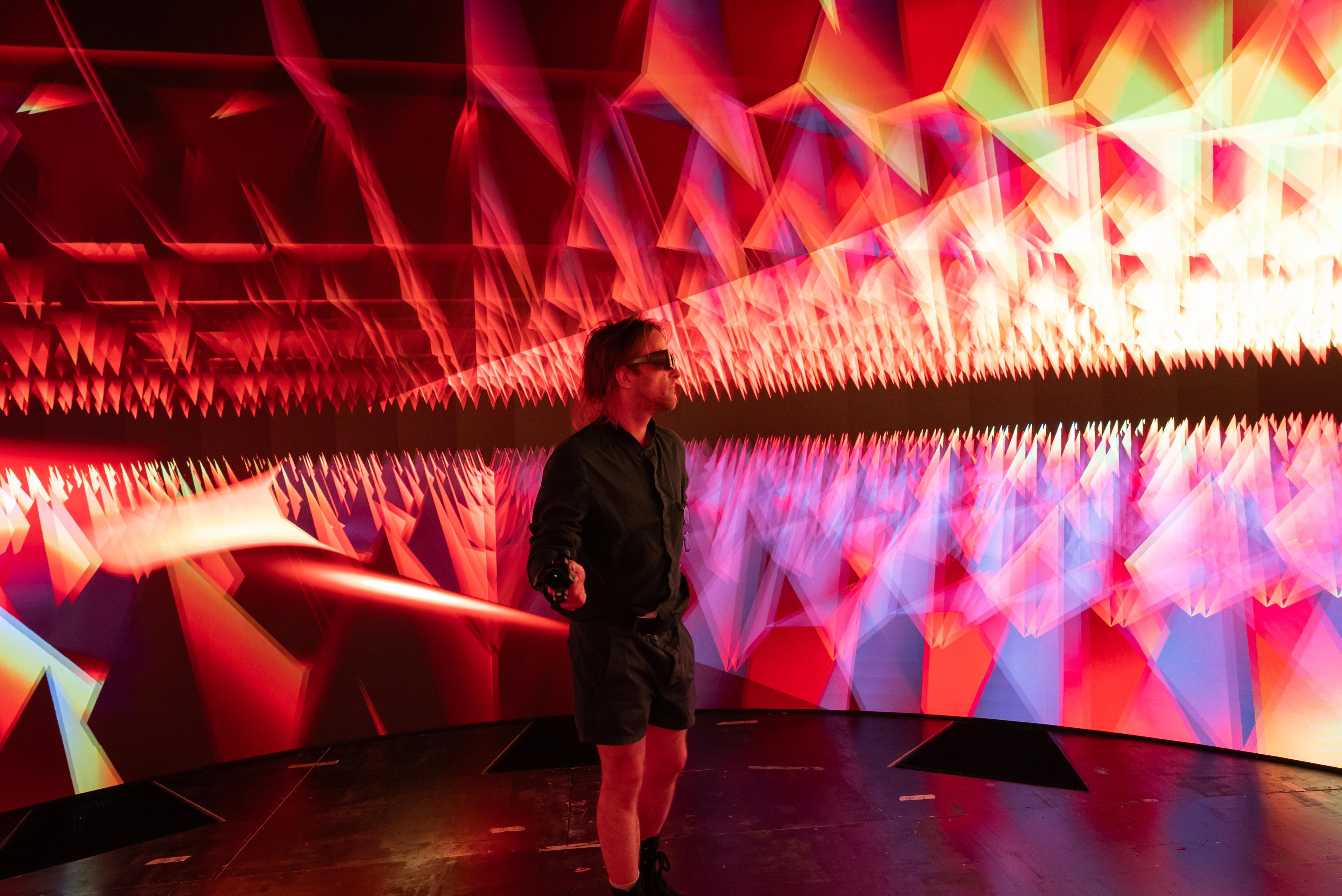

Firmament is an interactive performance that leverages real-time data on group dynamics to create immersive experiences. By continuously adapting to participants' interactions, the system generates unique outcomes within a 360-degree extended reality environment. Each performance evolves in response to collective behaviours, resulting in a tailored experience that reflects the complexities of group engagement.

The project focuses on the interplay of group behaviour, highlighting interactions that extend beyond individual actions. By observing the natural and involuntary movements of participants, the system captures subtle group formations that develop over time. These formations influence a network of interactive elements, which continuously respond and adapt to one another, fostering a dynamic and evolving experience.

Technical Narrative

The system processes raw data in real time and updates the environment as the group's positions change. The average distance between participants triggers shifts in visual and auditory elements, including colour gradients and sound properties such as pitch and frequency. The group's relative position can also alter the scaling of visual objects and the layering of sonic textures, so the environment responds directly to spatial relationships.
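The distance-driven mapping described above can be illustrated with a minimal sketch. It is written in plain Python and does not use any TouchDesigner API; it is not the project's actual code. The function names, the 0 to 10 m distance range, the hue and pitch ranges, and the use of the room centre as the reference for "relative position" are all assumptions chosen for illustration.

```python
from itertools import combinations
from math import dist


def mean_pairwise_distance(positions):
    """Average Euclidean distance over all pairs of participants."""
    pairs = list(combinations(positions, 2))
    if not pairs:
        return 0.0  # zero or one participant: no distances to average
    return sum(dist(a, b) for a, b in pairs) / len(pairs)


def lerp_clamped(value, in_min, in_max, out_min, out_max):
    """Map value linearly from the input range to the output range, clamped."""
    t = (value - in_min) / (in_max - in_min)
    t = max(0.0, min(1.0, t))
    return out_min + t * (out_max - out_min)


def centroid(positions):
    """Mean (x, y) position of the group."""
    n = len(positions)
    return (sum(p[0] for p in positions) / n,
            sum(p[1] for p in positions) / n)


def map_group_state(positions, max_distance=10.0, room_radius=5.0):
    """Derive illustrative visual and sonic parameters from (x, y) positions.

    All ranges here are hypothetical, not values from the installation.
    """
    if not positions:
        return {"hue": 0.0, "pitch_hz": 440.0, "scale": 1.0}

    spread = mean_pairwise_distance(positions)
    # Assumption: "relative position" is the centroid's offset from the
    # room centre at (0, 0).
    offset = dist(centroid(positions), (0.0, 0.0))

    return {
        # A tight group gives hue 0.0, a dispersed group gives hue 0.66.
        "hue": lerp_clamped(spread, 0.0, max_distance, 0.0, 0.66),
        # A tight group gives a higher pitch, a dispersed group a lower one.
        "pitch_hz": lerp_clamped(spread, 0.0, max_distance, 440.0, 110.0),
        # Visual objects grow as the group drifts away from the centre.
        "scale": lerp_clamped(offset, 0.0, room_radius, 1.0, 2.0),
    }


if __name__ == "__main__":
    print(map_group_state([(0.0, 0.0), (3.0, 4.0)]))
```

Recomputing these values on every tracking frame would make the colour, pitch, and scale follow the group continuously, which is the kind of behaviour the narrative describes. A real system would likely smooth the inputs over time to avoid jitter.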

This participatory system engages participants by illustrating how collective behaviours shape the visual and sonic environment. The rhythm of inhalation and exhalation influences sequential changes, mirroring natural patterns and creating a continuous interplay between group actions and sensory responses. This evolving network of interactions reveals a spectrum of possibilities, challenges, and potentials, enriching the immersive experience.

Principal Investigator: Christoph WIRTH

Partner: …

Date: 2024 - ongoing

Team: Christoph WIRTH (Concept, artistic direction, composition), Jeffrey Shaw (Artistic Advisory), Markus Wagner (Visual, XR and interaction design, lead coder), Max Schweder (Additional coding and consulting), Olga Siemienczuk (Singer), Thorbjörn Björnsson (Singer)

Hardware: nViz with 29.6 surround sound system

Software: TouchDesigner

2024.05.31

Premiere at Theater Dortmund, Germany

Innovation and Technology Fund, HKSAR