Future Cinema Systems

Future Cinema Systems (FCS) is a two-year research project led by Professor Jeffrey Shaw that has been awarded HKD 35.4 million by the Hong Kong Innovation and Technology Commission. In this project, Hong Kong Baptist University partners with EPFL Lausanne, Switzerland (led by Prof. Sarah Kenderdine) and City University of Hong Kong (led by Prof. Richard Allen).

FCS aims to expand cinema’s traditional areas of public engagement by bringing a new range of content under its umbrella, including virtual museums, intangible and tangible cultural heritage, big data archives and multimedia performance. The research harnesses the technological shift towards an increasingly pervasive and sophisticated engagement of the wider multi-sensory palette, one that is not restricted to the visual but encompasses all the human senses, and whose innovations will answer the persistent desire for ever more immersive and perceptually convincing media technology. At the heart of this development is the narrative potential of a hybrid, multi-modal space of co-evolutionary interaction between human and machine agents, in which human intentionality will enter into a dialogue with empathetic AI. This undertaking brings cinema into the 21st century by combining fully immersive display technologies and the prodigious real-time imaging capabilities of digital cinema with versatile sensing systems and the algorithmic extension of human-computer interaction (HCI).

Technical Research: visualisation, HCI and co-evolutionary narrative.

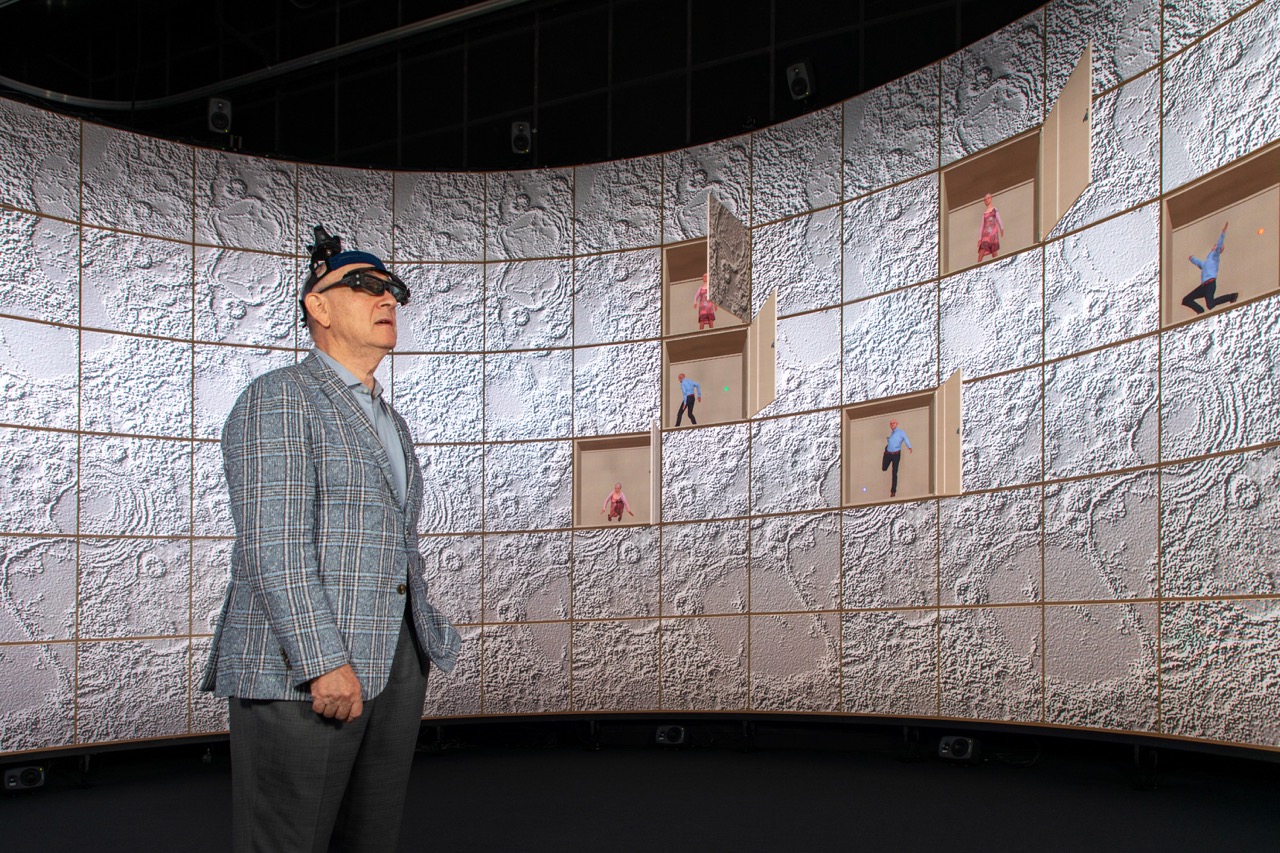

The FCS project will develop three integrated technical platforms for the staging of interactive and immersive audiovisual experiences. The first of these platforms, the Visualisation Engine, will be newly built as the world’s first 360-degree panoramic stereoscopic LED screen. It provides the principal setting for developing, testing and demonstrating co-evolutionary interactivity in a convincing audiovisual mixed-reality setting.

These immersive visualisation environments combine with a second technical ensemble, the Human-Computer Interaction Engine. This consists of an integrated array of sensing inputs, including a haptic interface, eye tracking, voice recognition and motion tracking, which will communicate audience behaviour and intentionality to the audiovisual manifold. The circuit of interactive and immersive experience is completed by a third apparatus, the Co-Evolutionary Narrative Engine. This comprises the software applications that will enable the audiovisual manifold to react and respond to the active and passive sensory prompts provided by participants. It provides a natural and continuous mixed-reality interface between the real world and the virtual world.

Combining these three technical platforms, FCS undertakes innovative research into the interactive sensory, interpretive, and performative functions of virtual agents capable of responding spontaneously to the complexities of human experience in real time. These techniques for building co-evolutionary relationships between virtual agents and human participants in digital environments will enable FCS to construct demonstrators in three areas of applied research: archive, cultural heritage and performance. The benefits of FCS research lie in its capacity to satisfy the rapidly emerging appetite of the digital information society for the aesthetic, moral and emotional enrichment of digital interaction, which is the acknowledged driver of innovation in digital technology. Achieving these outcomes involves the development of novel forms of interface, an intelligent and responsive audiovisual manifold, and scalable interactive applications.

Applied Research: archive, cultural heritage and performance

The FCS ARCHIVE demonstrator aims to demonstrate new paradigms, solutions and designs for browsing and exploring large data sets of digital content, such as, but not limited to, photographs, movies, 3D objects, or animated and moving compositions. By reconfiguring such varied content into assemblages, the interactive interface provides viewers with the means to navigate their own way through potentially vast archives of heterogeneous materials, and thus create their own paths and narratives.

For its CULTURAL HERITAGE demonstrator, FCS’s visualisation tools provide powerful capabilities for museum audiences to explore historical contexts and artefacts through emerging patterns, relationships and juxtapositions of elements. Disparate audiovisual data is brought together in narrative coherence; AI models are developed to assist in generating explanations of both content and interpretation; and natural language processing and computer vision are leveraged to innovate the ways in which we teach, learn, and experience the world’s past.

The Atlas of Maritime Buddhism’s visualisation tools provide powerful possibilities for museum audiences to explore atlases and discover their cultural treasures through emerging patterns, relationships and juxtapositions of elements. They bring disparate audiovisual data together in narrative coherence and allow users to create a narrative sense in which the data is both spatially and temporally contextualised. New mapping practices have also defined digital cultural atlases as deep maps that can be dynamically manipulated by users, contingent upon their semantically rich, performative and discursive nature. The schema proposed in this project converts the ‘deep map’ of the Atlas from a fixed map of points into a navigational one where everything is on the move, underpinning the user’s freedom to explore its diverse pathways while achieving the narrative coherence that gives meaning to the discoveries they make. AI models are developed to assist in generating explanations of both content and interpretation, while the latest advances in natural language processing and computer vision will be leveraged in this application to innovate the ways in which we teach, learn, and experience the world’s past and present.

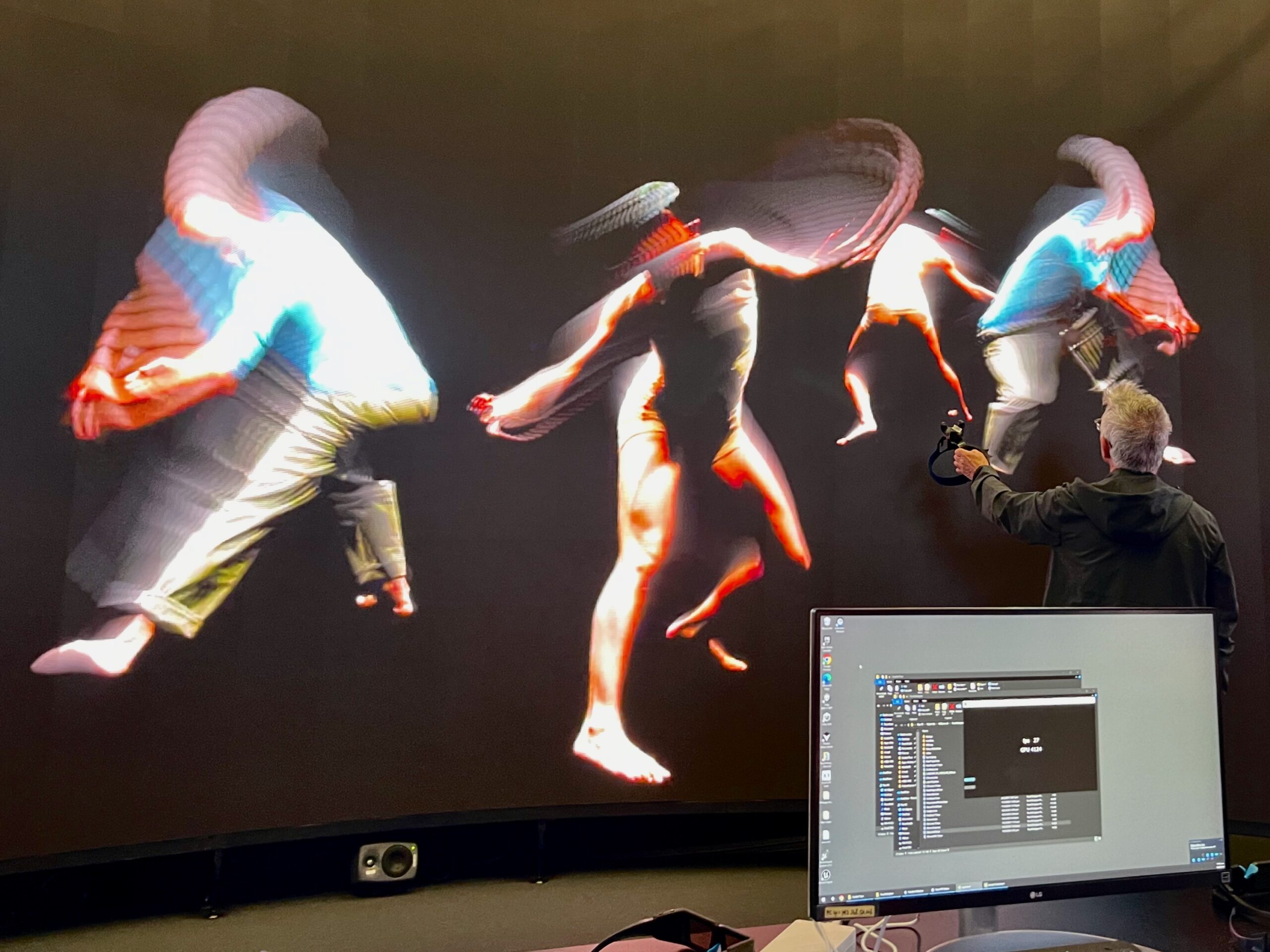

The PERFORMANCE demonstrator facilitates the next generation of performative media, including theatre, opera, dance, music and their cross-disciplinary forms, enabling a hybrid space that encompasses intelligent interaction between human and machine agents, interactive public participation, and mixed-reality immersive visualisation. The objective is to place human social interaction at the centre of the experience so that narrativity, interactivity and engagement are mutually reshaped.